DevOps Services and Consulting

Our DevOps services can save you up to 30% of your development and maintenance budget by eliminating server resource waste, shortening the release cycle, and ensuring codebase stability.

DevOps Services We Offer

Our DevOps experts can improve the efficiency of your IT operations and infrastructure at any stage of your project.

DevOps Tools & Technologies

Our DevOps consultants will help you select and implement the best-fit tools and technologies for automation, deployment, and codebase and infrastructure management:

Infrastructure as Code

- Helm
- Terraform
- Azure ARM Templates
- CloudFormation

CI/CD

- Jenkins
- AWS CodePipeline
- TeamCity
- Azure DevOps
- Docker
- Kubernetes

Automation and Orchestration

- Ansible
- Puppet
- Python
- Bash
- Kubernetes
- Docker

Cloud and Infrastructure Providers

- AWS
- Google Cloud
- Microsoft Azure
- DigitalOcean

Monitoring & Logging

- Datadog
- Prometheus
- Nagios
- Elasticsearch
- Kibana
- Grafana
- Amazon CloudWatch

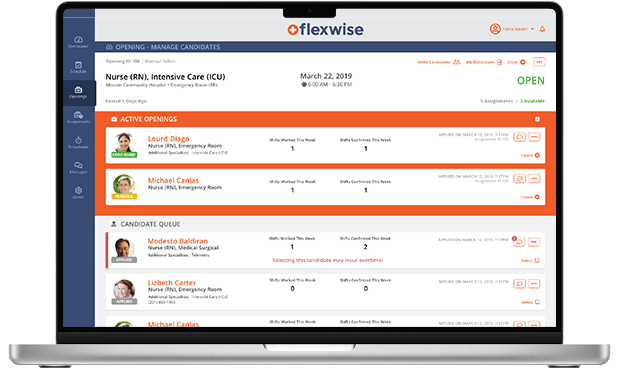

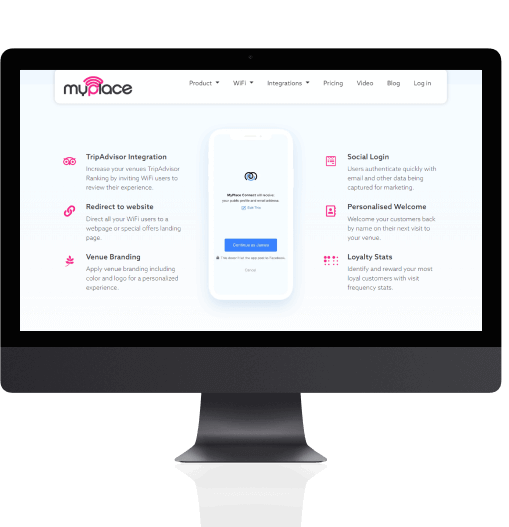

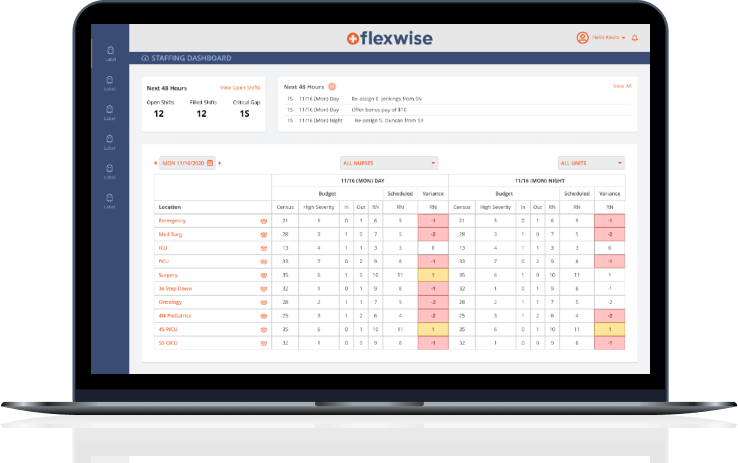

Success Stories

Boost Your Business Growth with Our DevOps Solutions

Our DevOps consultants offer solid, sustainable solutions for your software delivery and maintenance needs, improving team collaboration, streamlining workflows, and reducing your infrastructure costs over the long term.


Leveraging Pipelines and Cloud Adoption

Our DevOps consulting services can reduce cloud infrastructure expenditures by up to 50%.

Automated CI/CD across Cloud Platforms

We build CI/CD pipelines with proven technologies, so you can quickly deploy your system to your preferred cloud platform or to bare metal.

End-to-End Delivery Automation

Extensive process automation lets our DevOps team reduce delivery time from several hours to minutes, resulting in an 85% increase in team productivity.

Continuous Integration and Delivery

Our DevOps experts set up continuous integration and delivery to achieve high infrastructure resilience and security.

Scalable DevOps for Modern Applications

We can efficiently handle a growing scope of DevOps work, whether that means reorganizing infrastructure, setting up automated scaling, or meeting compliance requirements.