The chatbot market is forecast to reach around 1.25 billion U.S. dollars in 2025, a significant increase from its 2016 size of 190.8 million U.S. dollars. This growth reflects the increasing recognition of chatbots as essential tools for modern businesses.

Artificial intelligence (AI) has emerged as a transformative force, changing how we interact with machines. One of the most exciting applications of AI is conversational agents, commonly known as chatbots. These virtual assistants can enhance user experiences, streamline communication, and transform customer engagement.

Discover how to build chatbots with OpenAI, a frontrunner in artificial intelligence. In this guide, we’ll walk through the basics of building a chatbot. Whether you’re an experienced developer or a beginner, this article provides a straightforward path to creating smart, dynamic chatbots that elevate user experiences.


What is an AI-powered HR Chatbot, and What Are Its Capabilities?

An AI-powered HR chatbot is a virtual assistant: a software application that uses artificial intelligence to emulate human conversation. It allows employees and HR staff to ask questions or make requests and receive automated, personalized responses.

Key Capabilities of AI-Powered HR Chatbots:

- Data Access and Integration: They can access HR databases and other internal systems to retrieve relevant information quickly and accurately.

- Continuous Learning: AI chatbots can be improved over time using data from user interactions, which helps increase the accuracy and relevance of the information they provide.

- 24/7 Support: They offer round-the-clock assistance, efficiently managing routine HR inquiries without human intervention.

- Personalized Guidance: These chatbots can provide tailored advice and support by analyzing employee profiles and histories.

- Analytics and Insights: They can offer valuable data-driven insights into employee engagement and other HR metrics.

These capabilities stem from the advanced AI technologies integrated into the chatbots, enabling them to handle various HR functions effectively.


Top Use Cases of Chatbots in the Banking Industry and Their Benefits

AI technology is poised to transform many functions within the banking sector. Experts estimate that generative AI, particularly through applications such as conversational banking chatbots, could deliver an additional $200 billion to $340 billion in revenue and cost savings for banks each year, equivalent to 2.8% to 4.7% of the industry’s annual revenue.

This substantial boost is mainly due to the productivity enhancements and resource savings (human effort, time, and money) provided by GenAI applications. This section will highlight some practical uses of banking chatbots and their benefits.

Enhancing Employee Expertise and Retention

Effective onboarding and ongoing training are crucial in banking due to high turnover, complex regulations, and internal bank policies. However, banks often face hurdles related to:

- Scattered training resources across disconnected systems.

- One-size-fits-all onboarding that doesn’t adapt to roles or skill levels.

- Budget and time constraints that lead to rushed programs.

- Weak employee progress monitoring, delaying gap identification and improvements.

- Lack of post-onboarding refresher courses on the latest solutions and policies.

A chatbot can streamline these processes by:

- Serving as a central resource for human resources data.

- Guiding new hires through onboarding and tracking their progress.

- Quizzing, assessing, and suggesting learning materials.

- Creating personalized learning paths based on strengths and weaknesses.

- Monitoring individual and team progress for human resources and management insights.

- Providing admin tools for managing employee learning curves.

Banks are already using AI chatbots effectively in human resources processes. For example, the Dutch AMRO Bank’s AI virtual assistant helped reduce attrition by 40%, while the US-based SouthState Bank used its corporate ChatGPT to turn summer interns into proficient professionals.

Business Benefits:

- Quicker onboarding and training of new and existing employees.

- Reduced staff turnover.

- Greater employee expertise.

Establishing a Go-To Resource on Current Policies, Laws, and Industry Specifics

In the heavily regulated banking sector, the clear interpretation and application of policies and procedures are crucial. Bank employees must navigate a vast amount of information, spending considerable time sifting through laws, regulations, corporate processes, and other documents to find what they need.

This can lead to several issues:

- Manually searching for information consumes significant time.

- Overburdened employees lack time for accuracy checks.

- Employee responses to colleagues and customers may be inaccurate and need to be revised.

An AI chatbot in banking can help address these challenges.

For instance, one European bank used generative AI to create an Environmental, Social, and Governance (ESG) virtual expert that answers employees’ questions on these topics by searching extensive, unstructured documents.

A bank chatbot can serve as a go-to resource for employees, providing information on current policies, laws, and industry specifics. Designated employees can update it with the latest regulations or company policies, ensuring it remains current.

Business Benefits:

- Fewer hours spent locating needed information.

- Less burdened employees.

- Improved data accuracy.

- Minimized overhead for basic inquiries.

Improving Customer Query Resolution

Using chatbots for customer service interactions allows support teams to focus on more complex queries. Chatbots can quickly access vast databases to resolve customer issues. This approach offers two valuable scenarios:

- Implementing a smart pre-screening and automatic querying bot to reduce the workload for human agents.

- Deploying a self-service bot that retrieves knowledge base information, enabling customers to resolve queries independently, regardless of language or location.

For instance, Morgan Stanley’s GPT-4-powered AI assistant helps financial advisors swiftly locate and synthesize answers from an extensive internal knowledge base.

Business Benefits:

- Reduced response times for customer queries and technical issues.

- Decreased employee workload.


Guide to Chatbot Creation

Creating chatbots is becoming increasingly popular in various spheres, such as customer support, interactive storytelling, programming, copywriting, education, healthcare, and e-commerce.

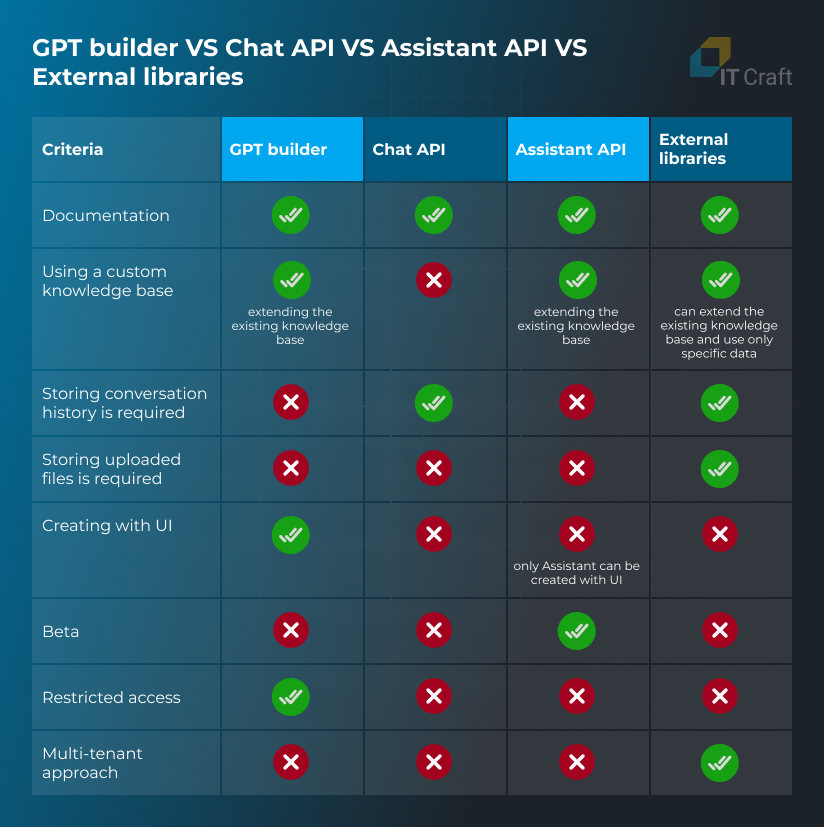

In some cases, a chatbot needs specific data to build its knowledge base; in others, the general knowledge of OpenAI’s models is sufficient. Some chatbots may also require a multi-tenant approach that lets different clients personalize settings while following common logic.

How you implement a chatbot may differ depending on the tasks and requirements.

This guide covers four ways to create an OpenAI chatbot:

- Using OpenAI’s GPT Builder

- Using OpenAI’s Chat API

- Using OpenAI’s Assistant API

- Using external libraries (such as langchain)


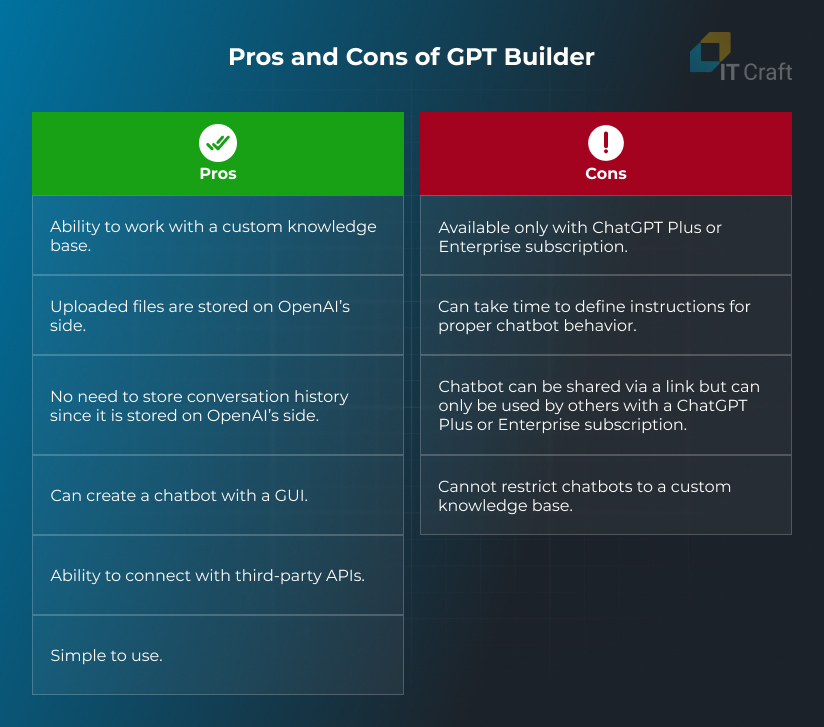

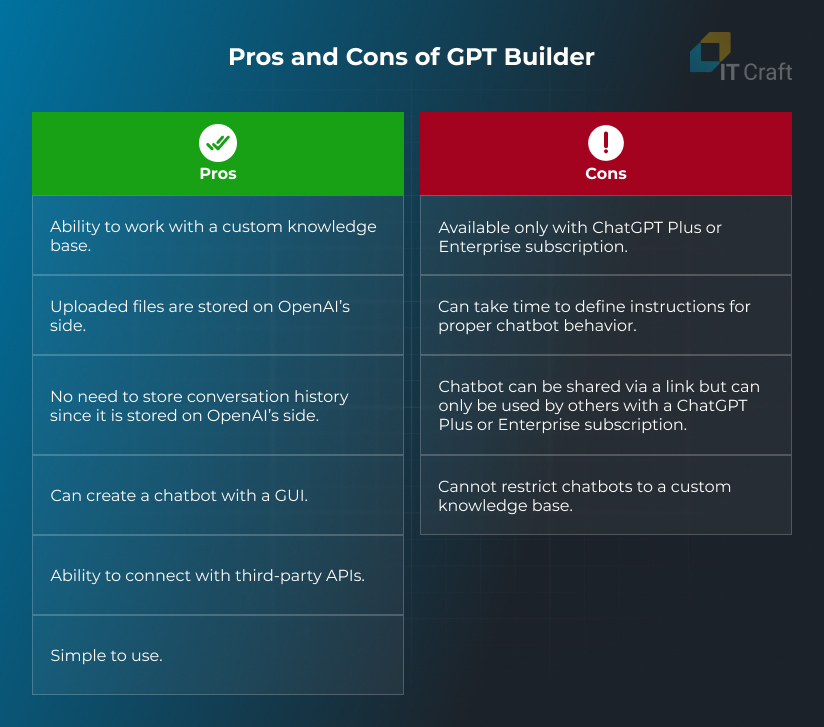

Creating a Chatbot Using OpenAI’s GPT Builder

This is a simple way to create a chatbot through a graphical user interface. GPT Builder is great for people who are not software developers but need to create a chatbot with certain behavioral requirements. Since GPT Builder works with natural language, it is easy for users to understand how to set up their chatbot.

This method is available only to ChatGPT Plus or Enterprise subscribers, and a chatbot created this way can only be used by other Plus or Enterprise subscribers.

Other GPT Builder features include integrating the chatbot with third-party APIs so it can retrieve real-time data from external websites (e.g., city weather), connect to Google Calendar, or provide remote access to user devices.

GPT Builder is displayed as a split screen: the Create panel is where we enter prompts to build the chatbot, and the Preview panel lets us interact with the chatbot as we build it, which makes it easier to refine.

To create a chatbot with GPT Builder, do the following:

- Enter instructions in the message box of the Create panel, then press Enter or Return.

- GPT Builder will then suggest a few things based on the instructions: a chatbot name, profile picture, and default conversation starters. We can accept the initial suggestions or ask GPT Builder to modify them.

- GPT Builder prompts us to enter more specific instructions to fine-tune the chatbot’s behavior. The best way to configure it is to test the chatbot in the Preview panel and add instructions based on its responses.

In GPT Builder, we can also upload files to use as a knowledge base; this data supplements, rather than overrides, the model’s general knowledge. In addition, GPT Builder allows us to connect the chatbot to third-party APIs and configure how it interacts with them.

After creating the chatbot, we can share it via a link or make it public.

GPT Builder Use Case

You could use GPT Builder to create a chatbot that solves mathematical equations using ChatGPT plugins.


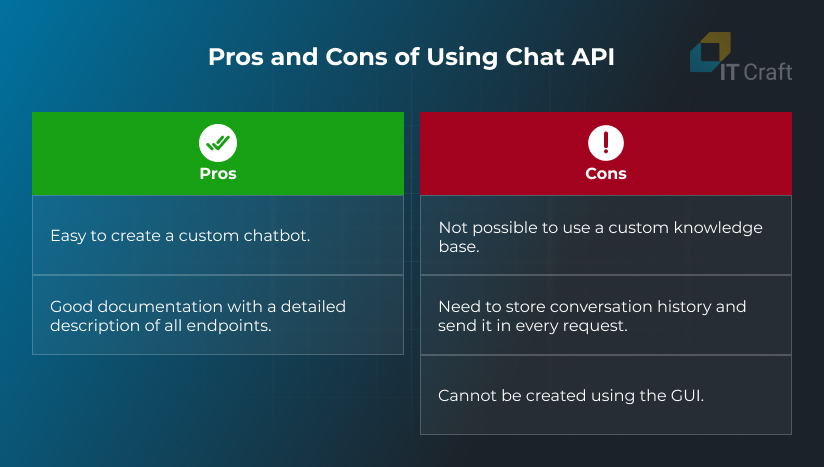

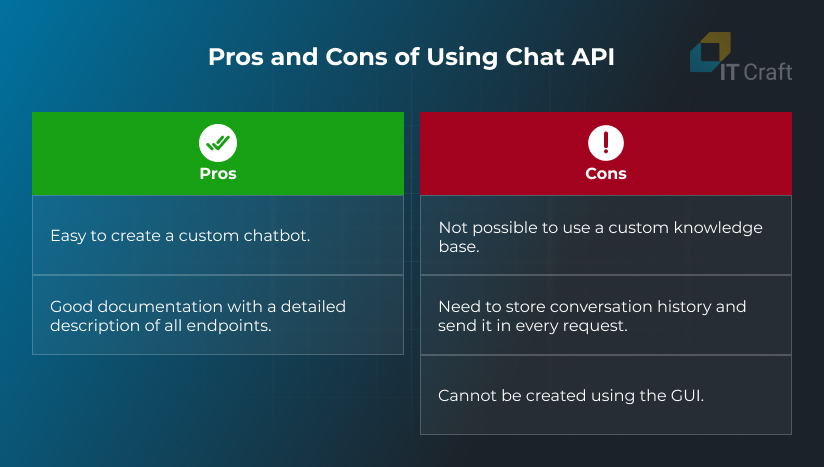

Creating a Chatbot Using OpenAI’s Chat API

This is the easiest way to create a chatbot programmatically. The chat model takes a list of messages as input and returns a model-generated message as output. Along with the user’s questions, the API accepts instructions on how the chatbot should respond.

Including conversation history is important because it helps the chatbot understand the context and generate the correct answer. However, we may run into a problem when the conversation history exceeds the model’s context length, the maximum number of tokens it can process in a single request.

As a solution, we can create and call a function that summarizes the earlier conversation and use that summary as part of the system message, which provides instructions or context for the chatbot. With this approach, responses draw only on the model’s general knowledge; there is no custom knowledge base.
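
A minimal Python sketch of the summarize-and-trim idea, under two stated assumptions: message length is measured in characters as a rough stand-in for tokens (a real implementation would count tokens with a tokenizer), and `summarize` is a hypothetical callable you supply, for example a separate Chat API request that asks the model to condense older turns. The name `fit_history` is illustrative.

```python
from typing import Callable

Message = dict[str, str]  # {"role": "system" | "user" | "assistant", "content": "..."}


def fit_history(
    system_prompt: str,
    history: list[Message],
    max_chars: int,
    summarize: Callable[[list[Message]], str],
) -> list[Message]:
    """Build a messages list whose content fits roughly within max_chars.

    The newest messages are kept verbatim; older ones are condensed by the
    hypothetical `summarize` callable and folded into the system message.
    Characters are only a rough proxy for the model's token limit.
    """
    kept: list[Message] = []
    used = len(system_prompt)
    # Walk backwards so the most recent turns are kept first.
    for message in reversed(history):
        if used + len(message["content"]) > max_chars:
            break
        kept.insert(0, message)
        used += len(message["content"])

    older = history[: len(history) - len(kept)]
    system_content = system_prompt
    if older:
        # The summary itself is not counted against max_chars in this sketch.
        system_content += "\n\nSummary of the earlier conversation: " + summarize(older)
    return [{"role": "system", "content": system_content}] + kept
```

Stopping at the first message that does not fit keeps the retained history contiguous, so the model never sees a conversation with gaps in the middle.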

To work with a chat conversation, we send the OpenAI Chat API an object with model and messages properties:

- model: The name of the OpenAI model that will process the user’s data, for example, gpt-3.5-turbo or gpt-4.

- messages: A list of messages comprising the conversation so far. Each message is an object with role and content properties:

  - role: A value that tells OpenAI who sent the content and how to process it. Possible values: system, user, assistant.

  - content: A string that contains the system instructions, the data a user entered in the GUI, or OpenAI’s response to the user’s question.

Based on the role value, OpenAI understands how to process the content. The system role determines the chatbot’s behavior.

The user role tells OpenAI that data was sent from the user and should be processed (the question should be answered). The result of OpenAI’s processing (a response to the question) is then sent with the assistant role. For more on this, check out the Chat API documentation.
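
To make the request shape concrete, here is a minimal Python sketch that builds, but does not send, a Chat API request using only the standard library. It assumes the Chat Completions endpoint https://api.openai.com/v1/chat/completions with bearer-token authentication; the helper name `build_chat_request`, the system prompt, and the placeholder key are illustrative.

```python
import json
import urllib.request

CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, messages: list[dict]) -> urllib.request.Request:
    """Return a POST request whose JSON body has the model and messages properties."""
    body = {"model": model, "messages": messages}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the secret key is sent with every request
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The system message sets the behavior; the user message carries the question.
messages = [
    {"role": "system", "content": "You are a friendly assistant for an online bookstore."},
    {"role": "user", "content": "Do you ship internationally?"},
]
request = build_chat_request("YOUR_API_KEY", "gpt-3.5-turbo", messages)
# urllib.request.urlopen(request) would send it; that needs network access and a real key.
```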

To create a chatbot with Chat API, do the following:

- Log in to our OpenAI account.

- In the sidebar, select API keys (or follow the link).

- Create a new secret key.

Note: The secret key can’t be viewed again later, so we need to copy it and store it somewhere safe immediately after it’s created. This key is sent with each API request. If we lose it, we will need to generate a new key.

- Start a conversation by sending the system message to establish the context.

The messages property value is an array whose first object contains two properties: role (with the value “system”) and content (a string containing instructions for how the chatbot should behave).

- Send user data.

Add an object to the messages array with role set to “user” and content containing the data entered by the user in the GUI. Because the Chat API does not remember earlier requests, each request must include the system message and the relevant conversation history, not just the latest user message.

- Retrieve Chat API’s response.

Chat API returns an object with several properties, but the most important data for the user, the response to the question, is in the choices property. This is an array of objects, each with a message property that contains the response to the user’s question.

It is represented as an object with role and content properties. The role property contains the value “assistant,” and the content property includes an answer to the user’s question.
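
Reading the answer out of choices and carrying the history forward can be sketched as follows. This is an illustrative sketch: `post` is a hypothetical callable standing in for the HTTP call (sending the messages to the Chat API and decoding the JSON response), so the logic runs without network access; only the response shape, choices[0].message with role and content, follows the Chat API. `fake_post` is an offline stub for demonstration.

```python
from typing import Callable

Message = dict[str, str]


def extract_reply(response: dict) -> Message:
    """Return the assistant message from a Chat API response body."""
    return response["choices"][0]["message"]


def chat_turn(history: list[Message], user_text: str, post: Callable[[list[Message]], dict]) -> str:
    """Run one conversation turn and keep the full history for the next request.

    The Chat API does not remember earlier requests, so the whole history
    (system message included) is sent each time via the hypothetical `post`.
    """
    history.append({"role": "user", "content": user_text})
    reply = extract_reply(post(history))
    history.append({"role": reply["role"], "content": reply["content"]})
    return reply["content"]


def fake_post(messages: list[Message]) -> dict:
    """Offline stand-in that mimics the shape of a Chat API response."""
    return {
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": f"You said: {messages[-1]['content']}"},
                "finish_reason": "stop",
            }
        ]
    }


history: list[Message] = [{"role": "system", "content": "Answer briefly."}]
answer = chat_turn(history, "Hello!", fake_post)
print(answer)  # You said: Hello!
```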

Chat API Use Case

You could use Chat API to create a general conversational chatbot that can answer questions about different topics and be embedded in a website or application.


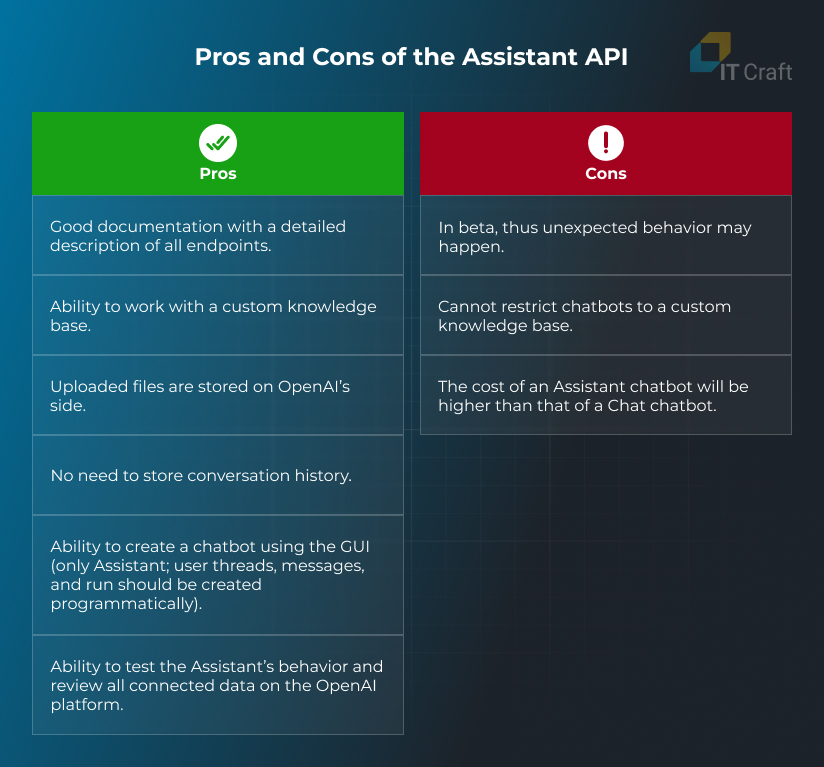

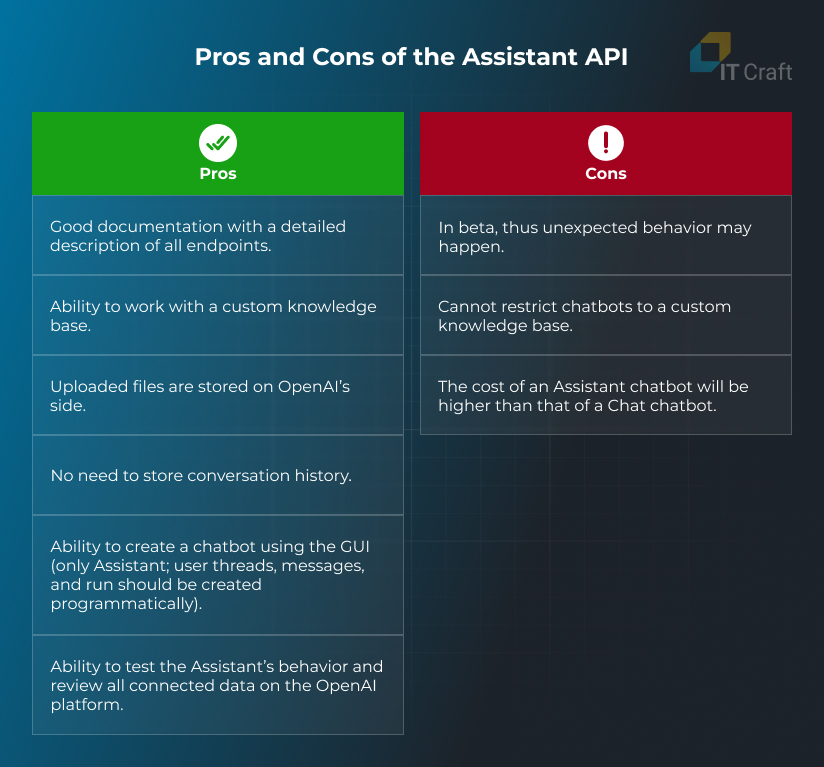

Creating a Chatbot Using OpenAI’s Assistant API

This approach offers more features than the Chat API, such as working with persistent threads, using files as an expanded knowledge base, processing user-attached files (e.g., translating file data), and calling tools such as a code interpreter to respond to queries. Persistent threads let us store conversation history on OpenAI’s side.

Assistant API is currently in beta, and some unpredictable behavior may occur. As mentioned above, we can upload our data and use it as a knowledge base. However, the model’s general knowledge will still be used if the custom data doesn’t contain a relevant answer to the user’s question.

OpenAI lets us test how an Assistant chatbot works in the Playground, review created Assistants on the Assistants page, and view all running threads on the Threads page.

To create a chatbot with Assistant API, we need to do the following:

- Create an Assistant with the necessary instructions.

- Configure the user’s interaction with the Assistant.

Creating an Assistant:

An Assistant can be created by calling the Assistant API or using the OpenAI web interface.

Calling the Assistant API: To create an Assistant, we need to send an object that contains name, instructions, and model properties. For more information, check the Create Assistant API documentation. All requests must include the API key.

To use a custom knowledge base, do the following:

- Add a tools property containing a tool of type retrieval to the object sent to the Create assistant API (or to the Modify assistant API when updating an existing Assistant).

- Upload files using the Upload file API. The request must include file and purpose properties, and the purpose value should be assistants. Learn more in the Upload file API documentation.

- Call the Create assistant file API to attach each uploaded file to the Assistant. The request must include the Assistant ID and the ID of the uploaded file.
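
The three calls above can be sketched as plain request descriptions in Python. This sketch assumes the v1 beta of the Assistants API that this section describes (the retrieval tool, the assistants=v1 beta header, and the /v1/assistants/{assistant_id}/files endpoint); later API versions changed how files are attached, so check the current documentation. The `ApiCall` type and helper names are hypothetical, the Authorization header with the API key is omitted for brevity, and the multipart upload is shown only as its form fields.

```python
from dataclasses import dataclass, field

# Header required by the v1 beta of the Assistants API (assumption: later versions use a different value).
BETA_HEADERS = {"OpenAI-Beta": "assistants=v1"}


@dataclass
class ApiCall:
    """Hypothetical description of one HTTP call: method, path, and body or form fields."""
    method: str
    path: str
    body: dict = field(default_factory=dict)
    headers: dict = field(default_factory=lambda: dict(BETA_HEADERS))


def create_assistant_call(name: str, instructions: str, model: str) -> ApiCall:
    # The retrieval tool lets the Assistant search the files attached to it.
    return ApiCall("POST", "/v1/assistants", {
        "name": name,
        "instructions": instructions,
        "model": model,
        "tools": [{"type": "retrieval"}],
    })


def upload_file_call(filename: str) -> ApiCall:
    # Sent as multipart/form-data; the file bytes themselves are omitted here.
    return ApiCall("POST", "/v1/files", {"file": filename, "purpose": "assistants"}, headers={})


def attach_file_call(assistant_id: str, file_id: str) -> ApiCall:
    # Attaches one uploaded file (its ID comes from the upload response) to the Assistant.
    return ApiCall("POST", f"/v1/assistants/{assistant_id}/files", {"file_id": file_id})


calls = [
    create_assistant_call("HR helper", "Answer questions using the attached handbook.", "gpt-4"),
    upload_file_call("employee_handbook.pdf"),
    attach_file_call("asst_abc123", "file-xyz789"),  # placeholder IDs
]
```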

Creating via the OpenAI platform:

- Go to the Assistants page and select Create.

- Enter the Assistant Name and Instructions and select the OpenAI model that will be used to generate responses.

- If we want to use a custom knowledge base, we need to activate the Retrieval tool and upload files that will be used as the knowledge base.

Configuring User Interactions with the Assistant

Implementing these steps can only be done programmatically. The API key must be sent with each API call.

- Create a user thread: We need to create only one thread for each user and reuse its thread.id in later requests for that user. A new thread should be created for a returning user only if the user starts a new conversation. To create a thread, we call the Create thread API.

- Create messages within a thread: In this step, we add the user’s message to the conversation context by sending an object with role and content properties to the Create message API.

The role should be “user”, and the content should contain the message entered by the user in the GUI. We also need to specify the user’s thread.id. Read the Create message API documentation.

- Run the thread and wait for successful completion: This step makes OpenAI generate a response. To start it, we call the Create run API, passing the thread.id and the Assistant ID.

To determine whether the run succeeded, we retrieve the run and check run.status, which should be completed. We may also want to limit how long we wait for a response and cancel the run if it takes too long. Read the Retrieve run API documentation. Once the run is completed, the Assistant’s reply is available as the newest message in the thread, which we can read with the List messages API.
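
A minimal Python sketch of two details above: keeping one thread per user and waiting for a run with a time limit. It assumes `create_thread` and `get_status` are hypothetical callables you implement on top of the Create thread and Retrieve run APIs; `ThreadRegistry` and `wait_for_run` are illustrative names, and the in-memory dictionary would typically be replaced by persistent storage.

```python
import time
from typing import Callable

# Statuses after which polling should stop ("requires_action" means tool outputs must be submitted).
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "requires_action"}


class ThreadRegistry:
    """Keeps one thread ID per user; `create_thread` is a hypothetical wrapper around the Create thread API."""

    def __init__(self, create_thread: Callable[[], str]) -> None:
        self._create_thread = create_thread
        self._threads: dict[str, str] = {}

    def thread_for(self, user_id: str, new_conversation: bool = False) -> str:
        # Reuse the existing thread unless the user explicitly starts a new conversation.
        if new_conversation or user_id not in self._threads:
            self._threads[user_id] = self._create_thread()
        return self._threads[user_id]


def wait_for_run(
    get_status: Callable[[], str],
    timeout_s: float = 30.0,
    poll_interval_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll a run's status (e.g. via the Retrieve run API) until it stops or the timeout passes."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status in STOP_STATUSES:
            return status
        if clock() >= deadline:
            # A real client would typically call the Cancel run API here.
            raise TimeoutError(f"run still {status!r} after {timeout_s} seconds")
        sleep(poll_interval_s)


# Offline usage with stand-in callables.
ids = iter(["thread_1", "thread_2"])
registry = ThreadRegistry(lambda: next(ids))
thread_id = registry.thread_for("alice")
statuses = iter(["queued", "in_progress", "completed"])
final = wait_for_run(lambda: next(statuses), sleep=lambda _: None)
```

Injecting `sleep` and `clock` keeps the polling logic testable without real waiting.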

Assistant API Use Case

You could use Assistant API to create a chatbot that answers questions related to the context of uploaded files and general questions.


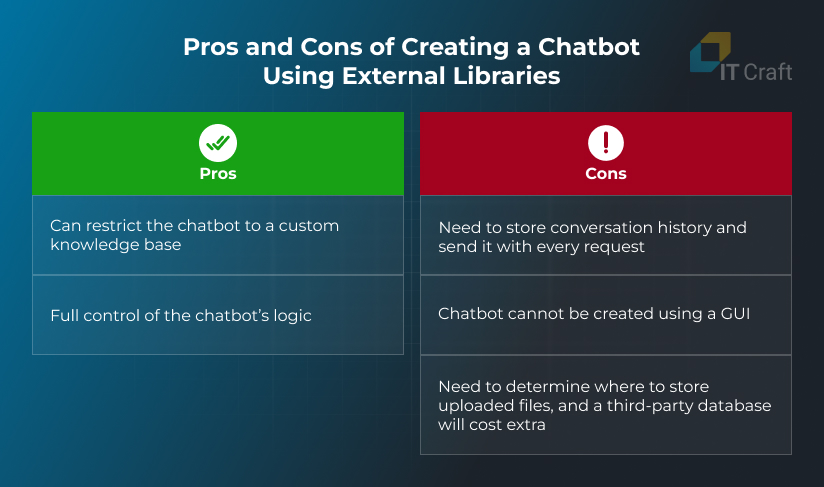

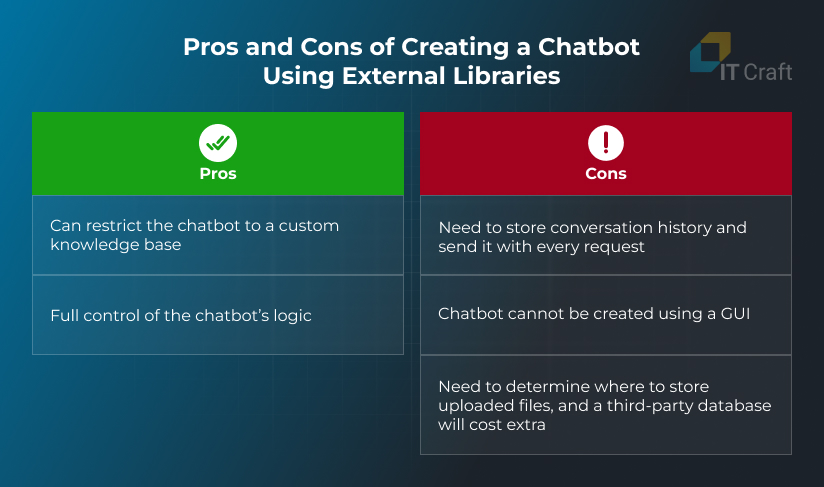

Creating a Chatbot Using External Libraries

This is the most difficult way to create a chatbot. Using this approach, we need to independently determine which libraries to use to process data in different formats (.pdf, .txt, .doc), where this data should be stored (locally or in an external database like Pinecone), find an appropriate method for conducting conversational question-and-answer tasks, and so on.

In exchange, our chatbot’s functionality depends entirely on the solutions we choose, and we can edit and improve individual blocks of logic as needed. For example, we can add text formatting or generate PDF files from chatbot answers. We can also restrict the chatbot to a specific knowledge base.

This way, we can instruct the chatbot to answer questions based only on previously provided information, although an instruction in the prompt reduces, rather than completely rules out, answers drawn from the model’s general knowledge.

Langchain is the most popular library for implementing this approach. It is a library for developing applications powered by large language models, and it helps developers build prompts, customize the prompt templates sent to OpenAI, process and split large text documents, retrieve data from external sources, convert that data into vector embeddings, and much more.

In this scenario, we want a chatbot that works with a specific data context. Langchain helps us process that data and transform it into a format that OpenAI models can use.

To create a chatbot using external libraries, we need to do the following:

Preconditions:

Install the langchain library in the development environment. Depending on the programming language we choose, we can use one of the following commands:

- Python: pip install langchain

- Node.js: npm install langchain

To use a custom knowledge base, we need to do the following:

- Load documents and convert their data into vector embeddings. We can use the following langchain library classes: PDFLoader, RecursiveCharacterTextSplitter, and FaissStore.

- If the documents for the chatbot were processed previously, we can instead load an existing vector store with the FaissStore or PineconeStore langchain classes.
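
To illustrate what splitting does before documents are embedded, here is a simplified Python sketch of fixed-size chunking with overlap. It is not langchain’s RecursiveCharacterTextSplitter, which additionally tries to break on separators such as paragraphs and lines; `split_text` and its default sizes are illustrative assumptions.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into chunks of at most chunk_size characters, each overlapping the previous one."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # ['abcd', 'defg', 'ghij']
```

The overlap repeats the end of one chunk at the start of the next, so text near a boundary keeps some surrounding context in both neighboring chunks.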

If we want to use an external database (Pinecone) to store processed documents (documents in vector format) that will be used as a knowledge database, we need to do the following:

- Log in to the Pinecone Console.

- In the sidebar, select Indexes.

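- Create a new Index: Fill in the name and dimensions fields, select the appropriate metric, and select the pod type value. The dimensions value must match the size of the embedding vectors (for example, 1536 for OpenAI’s text-embedding-ada-002 model). This index determines where the documents are stored.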

- In the sidebar, select API keys.

- Create an API key to access the Pinecone database. For details, see the Pinecone documentation.

Base steps:

- Create a chain for question-answering tasks using the LLMChain, loadQAChain, ConversationalRetrievalQAChain, and PromptTemplate langchain classes and functions.

- Process user-entered data by calling chain.invoke() and passing the user’s question and the chat history as parameters.

In this approach, the OpenAI API key is also required. It is used to create embeddings when processing documents and to run the chains.
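
Conceptually, a conversational retrieval chain finds the stored chunks most similar to the question and inserts them into a prompt together with the chat history. The Python sketch below shows that idea without langchain. It assumes the chunk and question embeddings have already been computed (the two-dimensional vectors are toy values; real embeddings have hundreds or thousands of dimensions), and `top_k`, `build_prompt`, and the prompt wording are hypothetical.

```python
import math

Vector = list[float]

PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the answer is not in the context, say that you can't answer the question.\n\n"
    "Context:\n{context}\n\n"
    "Chat history:\n{history}\n\n"
    "Question: {question}"
)


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Vector, store: list[tuple[str, Vector]], k: int = 2) -> list[str]:
    """Return the k stored chunks whose embeddings are most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine_similarity(query, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, query: Vector, store: list[tuple[str, Vector]],
                 chat_history: list[tuple[str, str]], k: int = 2) -> str:
    """Fill the template with the retrieved chunks, the chat history, and the question."""
    context = "\n---\n".join(top_k(query, store, k))
    history = "\n".join(f"{role}: {text}" for role, text in chat_history)
    return PROMPT_TEMPLATE.format(context=context, history=history, question=question)


# Toy knowledge base: (chunk text, precomputed embedding).
store = [
    ("Employees get 25 vacation days per year.", [1.0, 0.0]),
    ("Parking in the office garage is free.", [0.0, 1.0]),
    ("Sick leave longer than three days requires a doctor's note.", [0.7, 0.7]),
]
prompt = build_prompt(
    "How many vacation days do I get?", [1.0, 0.1], store,
    chat_history=[("user", "Hi"), ("assistant", "Hello! How can I help?")],
)
```

The instruction to refuse when the context lacks an answer is what produces the “can’t answer” behavior described in the use case below; the finished prompt is then sent to OpenAI like any other Chat API request.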

External Libraries Use Case

You could use external libraries to create a chatbot that answers questions related to custom-uploaded data. If data wasn’t uploaded for some reason (e.g., the data is out of date and requires modification, so it was temporarily deleted) or a question isn’t related to the data context, the chatbot will say it can’t answer the question.


Conclusion

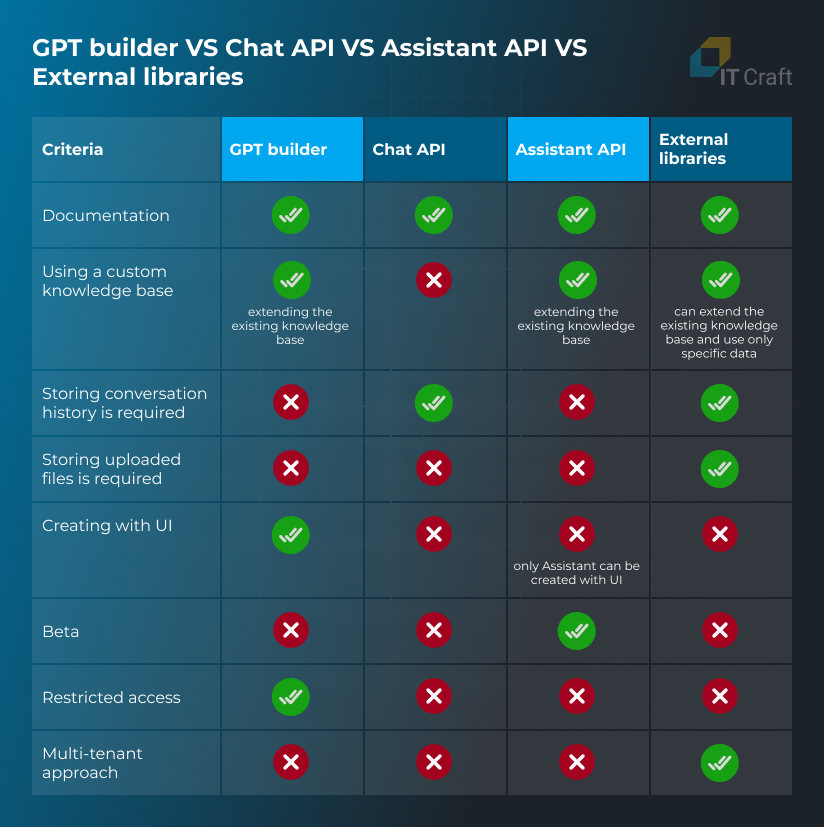

This article explored the uses of chatbots and the advantages and disadvantages of different methods for creating them, to help you better understand the key factors in chatbot development.

This knowledge will help you select the most appropriate approach for your specific needs and goals. The table above summarizes the most significant characteristics of each chatbot implementation approach to guide your decision-making.